https://doi.org/10.35845/kmuj.2024.23418

ORIGINAL ARTICLE

Impact of Mini-CEX and DOPS on clinical competence and satisfaction among final year medical students: A comparative study

1: Department of Medical Education, Jinnah Medical and Dental College, Karachi, Pakistan

2: Department of Medical Education, Bahawalpur Medical and Dental College, Bahawalpur, Pakistan

3: Undergraduate Medical Students, Jinnah Medical and Dental College, Karachi, Pakistan

Email Contact #: +92-302-2898138

Date Submitted: July 22, 2023

Date Revised: February 02, 2024

Date Accepted: February 27, 2024

THIS ARTICLE MAY BE CITED AS: Shahid Z, Fatima K, Khan Y, Agha MF, Urooj F. Impact of Mini-CEX and DOPS on clinical competence and satisfaction among final year medical students: a comparative interventional study. Khyber Med Univ J 2024;16(2):97-102. https://doi.org/10.35845/kmuj.2024.23418

ABSTRACT

OBJECTIVES: To compare pre- and post-mean scores of Mini-Clinical Evaluation Exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS), and to assess supervisor and student satisfaction with Mini-CEX and DOPS over time among final year medical undergraduates.

METHODS: This study was conducted on 90 final year medical students at a private medical college in Karachi from May 2022 to November 2022. Pre- and post-testing involved an Objective Structured Clinical Examination, comprising six stations, before and after the last session of DOPS and Mini-CEX. Workshops on Mini-CEX and DOPS were conducted for supervisors and students before pre-testing. Core clinical competencies included history taking, physical examination, counseling skills, critical thinking, professionalism, organization, management skills, and overall clinical performance. Data were analysed using SPSS version 23.

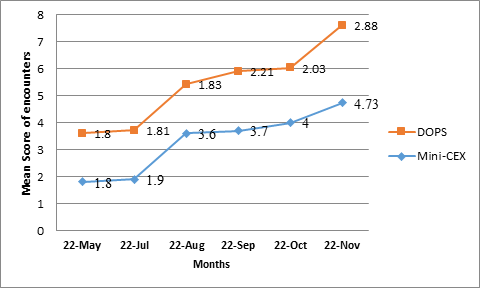

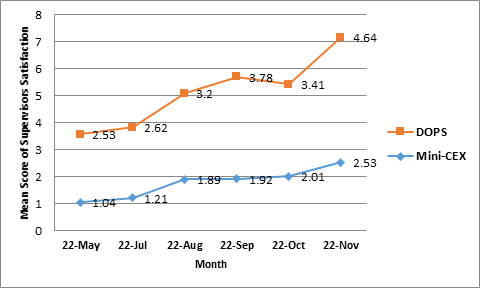

RESULTS: Over six months, 1080 encounters were conducted among 90 students in six sessions. Mean pre-test score for Mini-CEX was 13.78±3.61 and for DOPS was 11.57±3.0, with post-test scores of 43.61±3.38 and 42.28±1.8, respectively (p-value <0.0001). Organization/efficiency was the competency most frequently assessed in Mini-CEX, while management skills were most frequently assessed in DOPS. Progressive improvement was noted across each session of Mini-CEX and DOPS, with mean scores increasing from 1.8 to 4.73 for Mini-CEX and from 1.8 to 2.88 for DOPS. Supervisor satisfaction rose from 2.53 to 4.64 for DOPS and from 1.04 to 2.53 for Mini-CEX over the six encounters. The majority of students expressed satisfaction with both assessments.

CONCLUSION: Our study demonstrated significant improvement in clinical competencies over time assessed by Mini-CEX and DOPS, with high satisfaction among students and supervisors.

KEYWORDS: Clinical Competence (MeSH); Competencies (Non-MeSH); DOPS (Non-MeSH); Mini-CEX (Non-MeSH); Workplace based Assessment (Non-MeSH).

INTRODUCTION

Competency-based assessment has become increasingly prevalent in medical education, particularly through workplace-based assessment (WPBA), which aims to enhance clinical skills.1 Among the various tools utilized, Mini-Clinical Evaluation Exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS) stand out as effective formative assessment methods with significant educational impact.2 DOPS, in particular, is highly regarded for its ability to evaluate procedural skills in residents within a workplace setting.

Originating in the USA in 1995, Mini-CEX is a structured and validated WPBA tool specifically designed to evaluate clinical competencies among internal medicine residents. It comprises three key components: timely constructive feedback, direct observation, and assessment of clinical performance.3 Conversely, DOPS is characterized as a student-centered assessment approach, providing constructive feedback to learners that enhances their focus on essential learning points. This method empowers learners to identify their learning needs and cultivate self-reliance, as it facilitates memorization, screening, and responsive action.4 As a result, WPBAs, including Mini-CEX and DOPS, are increasingly utilized in developed countries to evaluate both undergraduate and postgraduate medical training, showcasing their feasibility and effectiveness in enhancing clinical skills and competencies.1

Literature on the effectiveness of workplace-based assessments (WPBAs) in developed countries reveals inconsistencies.1 Concurrently, numerous studies in Pakistan have been undertaken to evaluate the educational impact of Mini-CEX among residents, aiming to enhance clinical skills.5,6 Additionally, a systematic review conducted in Iran in 2018 emphasized the effectiveness of both DOPS and Mini-CEX, highlighting their positive influence on learning and high satisfaction scores among residents.4

Students have identified several major weaknesses of WPBAs, including poor test quality, the use of selected multiple assessors for all learners, test-related stress, assessor reliability, and biased assessment approaches. Despite these weaknesses, it is evident from literature that performance improves with repeated use of WPBAs.7 Mini-CEX and DOPS are not designed to determine pass/fail status but rather to identify performance gaps in clinical settings through constructive feedback. Immediate feedback from supervisors provides both quantitative and qualitative insights into training methods.5 Trainers and trainees are encouraged to repeat tasks until satisfaction is achieved, fostering experiential learning.8

In Pakistan, DOPS and Mini-CEX have been integrated into the curricula of the Fellowship of College of Physicians and Surgeons Pakistan (FCPS) since 2016.9 A recent study conducted in Karachi, Pakistan, evaluated the effectiveness of Mini-CEX among pediatric residents.10 This study addresses a significant gap in the literature by investigating the effectiveness of WPBAs among medical undergraduates using Mini-CEX and DOPS over time. At Jinnah Medical and Dental College Karachi (JMDC), Mini-CEX and DOPS have been introduced as formative assessment tools since August 2021, making them the focus of this research. The study was designed to compare the mean scores of Mini-CEX and DOPS before and after their implementation. Additionally, it was planned to assess the satisfaction levels of both supervisors and students with Mini-CEX and DOPS throughout the study duration among final year medical undergraduates. This sequential approach aimed to provide insights into the evolving effectiveness of WPBAs using Mini-CEX and DOPS and their impact on student performance and satisfaction levels over time.

METHODS

This pre- and post-test study was conducted at Jinnah Medical and Dental College, Karachi, Pakistan, from May 2022 to November 2022. The study received ethical approval from Jinnah Medical and Dental College (JMDC), Sohail University Karachi under protocol number 000171/22.

The sample size of 90 was determined using the OpenEpi calculator with an anticipated population of 100. Prior to the implementation of WPBAs using Mini-CEX and DOPS, workshops were conducted separately for students and supervisors to familiarize them with the assessment tools. Both Mini-CEX and DOPS had already been integrated into the institution's curriculum since 2021, ensuring that every student underwent Mini-CEX and DOPS encounters once a month.

For the pre-testing phase, an Objective Structured Clinical Examination (OSCE) was conducted. The OSCE comprised six stations, each with eight marks. Following the completion of Mini-CEX and DOPS sessions, the same OSCE stations were administered for post-testing. Mean scores were recorded and compared thereafter.

Before administration, the Mini-CEX and DOPS evaluation forms for supervisors underwent pilot testing to ensure their effectiveness. Cronbach's alpha was calculated to assess the reliability of items in the final evaluation questionnaire, which aimed to evaluate students' performance.

Supervisors, including professors, associate professors, assistant professors, and senior registrars, participated in the study. They assessed students in all six encounters of Mini-CEX and DOPS, ensuring consistency. Prior to supervising students, supervisors attended workshops.

A total of 180 supervisors participated in assessing 1080 encounters of DOPS and Mini-CEX for 90 students. Supervisors rated encounters using a 9-point Likert scale. Scores from 1-3 indicated unsatisfactory performance, highlighting gaps in skills or knowledge and some shortcomings in patient safety or professionalism. Scores from 4-6 were considered satisfactory, covering communication skills, clinical judgment, and competence at the final year level. Scores from 7-9 were deemed excellent, surpassing the desired learning level. Additionally, student satisfaction was assessed using a five-point Likert scale, ranging from highly dissatisfied to highly satisfied.
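The three rating bands described above can be expressed as a small lookup. The following is a minimal sketch in Python; the function name `rating_band` is hypothetical and is not part of the study's evaluation forms.

```python
def rating_band(score: int) -> str:
    """Map a 9-point supervisor rating to the band described in the Methods.

    1-3 -> unsatisfactory, 4-6 -> satisfactory, 7-9 -> excellent.
    The function name and error handling are illustrative assumptions.
    """
    if not 1 <= score <= 9:
        raise ValueError("rating must be between 1 and 9")
    if score <= 3:
        return "unsatisfactory"
    if score <= 6:
        return "satisfactory"
    return "excellent"


# Band for every possible rating on the 9-point scale.
bands = {score: rating_band(score) for score in range(1, 10)}
```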

Data collection for both WPBAs was conducted from May 2022 to November 2022, excluding the summer vacation period when final year medical students were on leave. Through purposive sampling, students who had participated in all six encounters and received feedback from supervisors for both DOPS and Mini-CEX were included in the study. Prior consent was obtained from participants, ensuring their understanding that the data would be used for research purposes. Participants were assured that their participation would not impact their internal assessment marks, which accounted for 20%, and data anonymity was maintained through coding.

A total of 90 students who had completed all six encounters of DOPS and Mini-CEX were included in the study. Students who had not attended all six encounters for any reason were excluded. Overall, there were 1080 encounters experienced across various clinical departments by these 90 final year students over the course of six months.
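The encounter total follows directly from the design, assuming each of the 90 students completed six encounters for each of the two tools. A short check in Python (variable names are illustrative):

```python
# Design figures taken from the Methods; variable names are illustrative.
students = 90
encounters_per_tool = 6
tools = ("Mini-CEX", "DOPS")

encounters_per_tool_total = students * encounters_per_tool    # 540 per tool
total_encounters = encounters_per_tool_total * len(tools)     # 1080 overall
```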

The core competencies assessed in the framework of Mini-CEX and DOPS included history taking, physical examination, counseling skills, critical thinking, professionalism, organization, management skills, and overall clinical performance.10

Data from the Mini-CEX and DOPS assessments of 90 students were entered into SPSS version 23. Normality of the data was assessed using the Kolmogorov-Smirnov test. For inferential statistics, the Wilcoxon signed-rank test was employed to compare pre- and post-test scores. Results were presented as mean scores and standard deviations for quantitative variables, and frequencies for qualitative variables. A p-value of less than 0.05 was considered statistically significant.
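For readers unfamiliar with the paired comparison named above, the following is a minimal sketch of a two-sided Wilcoxon signed-rank test in plain Python. It uses the normal approximation without tie or continuity corrections, so it is not a reproduction of the SPSS procedure used in this study. The `pre` and `post` lists are hypothetical scores for ten students, invented purely for illustration.

```python
import math
from statistics import NormalDist


def wilcoxon_signed_rank(pre, post):
    """Two-sided Wilcoxon signed-rank test (normal approximation).

    Returns (W, z, p), where W is the smaller of the positive and
    negative rank sums. Zero differences are dropped and tied absolute
    differences share their average rank. No tie or continuity
    correction is applied, so p is approximate.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")

    # Rank the absolute differences, averaging ranks within ties.
    order = sorted(range(n), key=lambda idx: abs(diffs[idx]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of 1-based ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1

    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)

    mean_w = n * (n + 1) / 4
    sd_w = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w - mean_w) / sd_w              # z <= 0 because w is the smaller sum
    p = min(1.0, 2 * NormalDist().cdf(z))
    return w, z, p


# Hypothetical paired scores (NOT study data), for illustration only.
pre = [12, 15, 10, 14, 18, 9, 13, 16, 11, 14]
post = [44, 42, 40, 45, 46, 39, 43, 47, 41, 44]
w, z, p = wilcoxon_signed_rank(pre, post)
```

Because every hypothetical student improves, the negative rank sum is zero and the approximate p-value falls below 0.01. With small samples or many ties, an exact or corrected procedure from a statistics package would be preferable.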

RESULTS

A total of 1080 encounters were recorded over six months, involving 90 students in six sessions of Mini-Clinical Evaluation Exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS) individually. Among the participants, there were 38 (42.2%) males and 52 (57.8%) females. The mean age was 23.65±0.68 years. The mean academic score was 2.93±0.25, ranging from a minimum GPA of 2.0 to a maximum GPA of 3.4.

The department with the highest number of observations was medicine, with 31 encounters recorded for each of Mini-CEX and DOPS. Across all four clinical settings (medicine, surgery, gynecology/obstetrics, and pediatrics), the most frequently evaluated clinical competency in Mini-CEX was organization/efficiency, observed in 21 encounters, most often in the surgery department (n=8). Conversely, management skills were the most frequently observed clinical competency during DOPS, with 17 students assessed accordingly (Table I).

Table I: Competencies evaluated with Mini-CEX and DOPS post electives among final year students (n=90)

| Clinical Competencies | Medicine | Surgery | Gynecology / Obstetrics | Pediatrics | P Value* |
|---|---|---|---|---|---|
| **Competencies evaluated in Mini-CEX** | | | | | |
| History Taking | 2 | 1 | 1 | 1 | 0.21 |
| Physical Examination | 2 | 1 | 1 | 4 | |
| Critical Thinking | 1 | 1 | 4 | 1 | |
| Counseling Skills | 3 | 1 | 4 | 1 | |
| Management Skills | 5 | 4 | 0 | 4 | |
| Organization Efficiency | 5 | 8 | 4 | 4 | |
| Professionalism | 8 | 5 | 4 | 1 | |
| Overall Clinical Competence | 5 | 2 | 1 | 1 | |
| **Competencies evaluated in DOPS** | | | | | |
| History Taking | 4 | 1 | 4 | 0 | 0.130 |
| Physical Examination | 2 | 3 | 5 | 3 | |
| Critical Thinking | 3 | 2 | 2 | 5 | |
| Counseling Skills | 7 | 3 | 1 | 1 | |
| Management Skills | 6 | 8 | 2 | 1 | |
| Organization Efficiency | 4 | 4 | 1 | 4 | |
| Professionalism | 5 | 1 | 2 | 2 | |
| Overall Clinical Competence | 0 | 1 | 2 | 1 | |

*Chi Square
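The row and column totals quoted in the text can be recomputed from the counts in Table I. The sketch below transcribes the table into Python dictionaries; the helper names (`row_totals`, `column_totals`, `most_frequent`) are illustrative and not part of the original analysis.

```python
DEPARTMENTS = ("Medicine", "Surgery", "Gynecology/Obstetrics", "Pediatrics")

# Counts transcribed from Table I, in the column order of DEPARTMENTS.
MINI_CEX = {
    "History Taking": (2, 1, 1, 1),
    "Physical Examination": (2, 1, 1, 4),
    "Critical Thinking": (1, 1, 4, 1),
    "Counseling Skills": (3, 1, 4, 1),
    "Management Skills": (5, 4, 0, 4),
    "Organization Efficiency": (5, 8, 4, 4),
    "Professionalism": (8, 5, 4, 1),
    "Overall Clinical Competence": (5, 2, 1, 1),
}
DOPS = {
    "History Taking": (4, 1, 4, 0),
    "Physical Examination": (2, 3, 5, 3),
    "Critical Thinking": (3, 2, 2, 5),
    "Counseling Skills": (7, 3, 1, 1),
    "Management Skills": (6, 8, 2, 1),
    "Organization Efficiency": (4, 4, 1, 4),
    "Professionalism": (5, 1, 2, 2),
    "Overall Clinical Competence": (0, 1, 2, 1),
}


def row_totals(table):
    """Total observations per competency across all departments."""
    return {name: sum(counts) for name, counts in table.items()}


def column_totals(table):
    """Total observations per department across all competencies."""
    sums = (sum(column) for column in zip(*table.values()))
    return dict(zip(DEPARTMENTS, sums))


def most_frequent(table):
    """Competency with the largest row total, with its count."""
    totals = row_totals(table)
    name = max(totals, key=totals.get)
    return name, totals[name]


mini_cex_top = most_frequent(MINI_CEX)   # ("Organization Efficiency", 21)
dops_top = most_frequent(DOPS)           # ("Management Skills", 17)
```

Each block sums to 90 observations, and the medicine column totals 31 in both blocks, which matches the figures reported in the Results.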

Table II shows a notable increase in mean scores from pre-test to post-test for both Mini-CEX and DOPS. For Mini-CEX, the pre-test mean score was 13.78±3.61, while the post-test mean score was 43.61±3.38. Similarly, for DOPS, the pre-test mean score was 11.57±3.0, and the post-test mean score was 42.28±1.8 (p-value 0.0001).

Table II: Comparing sum of pre and post mean scores of Mini-Clinical Evaluation Exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS)

| WPBA | Score | Mean ± SD | P-Value |
|---|---|---|---|
| Mini-CEX | Pre-Test | 13.78 ± 3.61 | 0.0001* |
| | Post-Test | 43.61 ± 3.38 | |
| DOPS | Pre-Test | 11.57 ± 3.0 | 0.0001* |
| | Post-Test | 42.28 ± 1.8 | |

*Wilcoxon Signed-Rank Test; WPBA=workplace-based assessment
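To put the summed means in context, they can be expressed as a share of the maximum available score. The sketch below assumes the summed scores are out of 48, that is, six OSCE stations at eight marks each as described in the Methods. That denominator is an inference, since the table itself does not state it.

```python
# ASSUMPTION: scores are out of 48 (six OSCE stations x eight marks).
MAX_SCORE = 6 * 8

# Mean scores as reported in Table II.
MEANS = {
    "Mini-CEX": {"pre": 13.78, "post": 43.61},
    "DOPS": {"pre": 11.57, "post": 42.28},
}


def summarize(pre, post, max_score=MAX_SCORE):
    """Absolute gain and pre/post means as a percentage of max_score."""
    return {
        "gain": round(post - pre, 2),
        "pre_pct": round(100 * pre / max_score, 1),
        "post_pct": round(100 * post / max_score, 1),
    }


summary = {tool: summarize(**means) for tool, means in MEANS.items()}
```

Under that assumption, the Mini-CEX mean rises from about 29% to about 91% of the maximum, and the DOPS mean from about 24% to about 88%.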

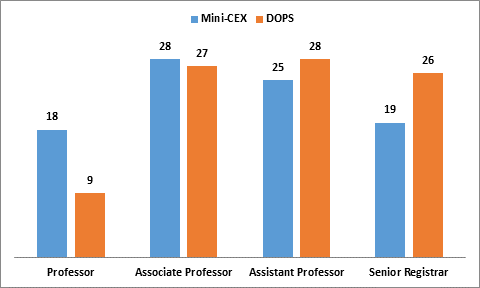

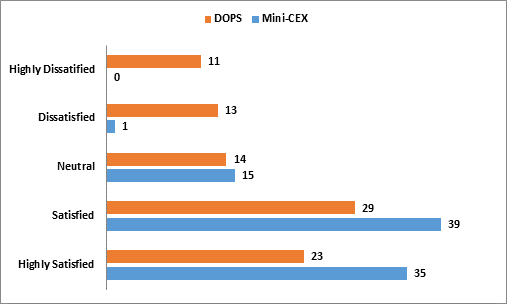

Figure 1 displays the distribution of supervisors' designations for both Mini-CEX and DOPS. Associate professors supervised the highest number of encounters, comprising 35% (n=380) of all Mini-CEX and DOPS assessments. Figure 2 depicts the progressive improvement observed across each session based on the mean scores of Mini-CEX and DOPS. Mean scores increased from 1.8 to 4.73 for Mini-CEX and from 1.8 to 2.88 for DOPS. Figure 3 demonstrates the rise in supervisor satisfaction over the six encounters of DOPS, with mean scores increasing from 2.53 to 4.64. A similar trend is observed for Mini-CEX, with mean scores rising from 1.04 to 2.53. Figure 4 illustrates that the majority of students expressed satisfaction with both DOPS and Mini-CEX.

Figure 1: Supervisors Designation in Mini-clinical Evaluations exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS) (Mean Score) Encounters

Figure 2: Mean Score of every encounter of Mini-clinical evaluations exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS)

Figure 3: Supervisors Satisfaction for Mini-clinical Evaluations exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS) (Mean Score)

Figure 4: Students Satisfaction with Mini-clinical Evaluations exercise (Mini-CEX) and Direct Observation of Procedural Skills (DOPS)

DISCUSSION

This study aimed to evaluate the effectiveness of DOPS and Mini-CEX as assessment tools among undergraduate medical students, focusing on enhancing clinical competencies. Previous research has shown that repeated encounters with these tools improve clinical skills, particularly among pediatric residents.10 Key clinical competencies assessed included history taking, physical examination, counseling skills, critical thinking, professionalism, organization, management skills, and overall clinical performance. This study is the first of its kind at the undergraduate level. Significant improvement was observed in pre-test and post-test scores across all clinical competencies after six encounters with DOPS and Mini-CEX.

Significant improvement was observed in the sum of mean scores of pre-test and post-test performance for both Mini-CEX and DOPS among final year medical students across all clinical competencies over the course of six encounters. This improvement can be attributed to the feedback provided by supervisors during Mini-CEX and DOPS assessments, which helps students identify gaps in their learning and focus on areas requiring improvement.11 The provision of constructive, timely, and contextual feedback leads to progressive learning enhancement over time. Additionally, the student satisfaction observed here is consistent with the findings of Jafarpoor H, et al. (2021), in which nursing students expressed higher satisfaction when taught skills using DOPS and Mini-CEX in the dialysis unit.12

Studies from India conducted among pediatric13 and psychiatry14 residents in 2016 and 2021, respectively, have reported comparisons between initial and final scores of Mini-CEX encounters.

Supervisor satisfaction scores showed progressive improvement across all six encounters of Mini-CEX and DOPS, as depicted in Figure 3. These findings align with a study by Azeem M, et al. (2022),15 which found that DOPS was perceived as a feasible and acceptable tool for orthodontic trainees and supervisors in a real hospital setting. Similarly, Kamat C, et al. (2021)16 utilized DOPS as an assessment tool for anesthesiology residents and found positive feedback from faculty and students, indicating enhanced procedural competencies. Additionally, DOPS was reported as a feasible assessment tool for improving surgical suturing skills among surgery residents.17

A 2023 study conducted among surgical residents in Pakistan18 revealed DOPS as a practical and cost-effective assessment tool for enhancing procedural skills and future performance. Constructive feedback regarding core clinical competencies was found to be instrumental in this improvement. Additionally, a study among second-semester nursing students in 2022 demonstrated that DOPS can be integrated into students' logbooks to track the progressive development of clinical skills and professionalism.19

The effectiveness of DOPS in dental education is influenced by the presence of trained supervisors, sufficient time to run the DOPS and availability of patients as reported by Handayani NP, et al., in 2022.20

In this study, the maximum number of observations was made in the medicine department (n=31) for both Mini-CEX and DOPS. In Mini-CEX, organization/efficiency (n=21) was the most frequently evaluated clinical competency across all four clinical settings (medicine, surgery, pediatrics, and obstetrics/gynecology), most often in the surgery department. In DOPS, clinical management skills (n=17) were the most frequently observed clinical competency, again most often in the surgery department. This result is congruent with the study of Ehsan and Usmani (2023),10 in which mean scores of organization/efficiency and clinical management skills improved significantly.

No previous studies investigating the educational impact of WPBAs in undergraduate students in Karachi were found by the researcher, suggesting that this study may be the first of its kind in the region. This research introduces a novel approach by advocating for the integration of WPBAs as an assessment tool into the undergraduate curriculum of the Pakistan Medical and Dental Council (PMDC), with the aim of enhancing clinical competencies and performance through structured feedback. Moreover, WPBAs have the potential to evaluate soft clinical competencies such as professionalism, critical thinking, communication skills, and counseling skills, which can improve over time with repeated exposure to Mini-CEX and DOPS assessments.

However, the study has several potential weaknesses. As it is a single-centered study, the results may not be generalizable. To gather more authentic evidence, future research may consider conducting a multi-centered study.

CONCLUSION

This study found that clinical competencies were progressively enhanced through repeated application of Mini-CEX and DOPS among final year medical undergraduates at a private medical college. Furthermore, supervisor feedback and satisfaction played a crucial role in helping students identify gaps and improve their learning over time. Mini-CEX and DOPS emerged as reliable formative assessment tools for evaluating performance in clinical competencies.

ACKNOWLEDGEMENT

The authors would like to acknowledge the valuable support and assistance of the research department of Jinnah Medical and Dental College Karachi and Bahawalpur Medical College, Bahawalpur.

REFERENCES

1. Lörwald AC, Lahner FM, Greif R, Berendonk C, Norcini J, Huwendiek S. Factors influencing the educational impact of Mini-CEX and DOPS: a qualitative synthesis. Med Teach 2018; 40(4):414-20. https://doi.org/10.1080/0142159X.2017.1408901

2. Martinsen SS, Espeland T, Berg EA, Samstad E, Lillebo B, Slørdahl TS. Examining the educational impact of the mini-CEX: a randomised controlled study. BMC Med Educ 2021;21:1-0. https://doi.org/10.1186/s12909-021-02670-3

3. Norcini JJ, Blank LL, Arnold GK, Kimball HR. The mini-CEX (clinical evaluation exercise): a preliminary investigation. Ann Intern Med 1995;123(10):795-9. https://doi.org/10.7326/0003-4819-123-10-199511150-00008

4. Khanghahi ME, Azar FE. Direct observation of procedural skills (DOPS) evaluation method: Systematic review of evidence. Med J Islamic Republic Iran 2018;32:45. https://doi.org/10.14196/mjiri.32.45

5. Shah MI, Qayum I, Bilal N, Ahmed S. Evaluation of an implemented Mini-CEX program for workplace-based assessment at Rehman Medical College, Peshawar, Khyber Pakhtunkhwa. Professional Med J 2021;28(9):1346-50. https://doi.org/10.29309/TPMJ/2021.28.09.6120

6. Azeem MU, Bukhari FA, Manzoor MU, Raza A, Haq A, Hamid W. Effectiveness of mini-clinical evaluation exercise (Mini-CEX) With multisource feedback as assessment tool for orthodontic PG residents. Pak J Health Med Sci 2020;14(3):647-9.

7. Dabir S, Hoseinzadeh M, Mosaffa F, Hosseini B, Dahi M, Vosoughian M et al. The effect of repeated direct observation of procedural skills (R-DOPS) assessment method on the clinical skills of anaesthesiology residents. Anaesthesiol Pain Med 2021;11(1). https://doi.org/10.5812/aapm.111074

8. Kara CO, Mengi E, Tümkaya F, Topuz B, Ardıç FN. Direct observation of procedural skills in otorhinolaryngology training. Turkish Arch Otorhinolaryngol 2018;56(1):7. https://doi.org/10.5152/tao.2018.3065

9. Alam I, Ali Z, Muhammad R, Afridi MA, Ahmed F. Mini-CEX: an assessment tool for observed evaluation of medical postgraduate residents during their training program: an overview and recommendations for its implementation in CPSP residency program. J Postgrad Med Inst 2016;30(2):110-4.

10. Ehsan S, Usmani A. Effectiveness of Mini-CEX as an assessment tool in pediatric postgraduate residents’ learning. J Liaquat Uni Med Health Sci 2023;22(01):50-4. https://doi.org/10.22442/jlumhs.2022.00982

11. Kim S, Willett LR, Noveck H, Patel MS, Walker JA, Terregino CA. Implementation of a mini-CEX requirement across all third-year clerkships. Teach Learn Med 2016;28:424-31. https://doi.org/10.1080/10401334.2016.1165682

12. Jafarpoor H, Hosseini M, Sohrabi M, Mehmannavazan M. The effect of direct observation of procedural skills/mini-clinical evaluation exercise on the satisfaction and clinical skills of nursing students in dialysis. J Educ Health Prom 2021;10. https://doi.org/10.4103/jehp.jehp_618_20

13. Buch PM. Impact of introduction of Mini-Clinical Evaluation Exercise in formative assessment of undergraduate medical students in pediatrics. Int J Contemp Pediatr 2019;6(6):2248-53. http://dx.doi.org/10.18203/2349-3291.ijcp20194700

14. Sethi S, Srivastava V, Verma P. Mini-Clinical Evaluation Exercise as a Tool for Formative Assessment of Postgraduates in Psychiatry. Int J Appl Basic Med Res 2021;11(1):27-31. https://doi.org/10.4103/ijabmr.IJABMR_305_20

15. Azeem M, Usmani A, Ashar A. Effectiveness of Direct Observation of Procedural Skills (DOPS) for improving the mini-implant insertion procedural skills of postgraduate orthodontic trainees. Pak J Med Health Sci 2022;16(05):192-4. https://doi.org/10.53350/pjmhs22165192

16. Kamat C, Todakar M, Patil M, Teli A. Changing trends in assessment: Effectiveness of Direct observation of procedural skills (DOPS) as an assessment tool in anesthesiology postgraduate students. J Anaesthesiol Clin Pharmacol 2022;38(2):275. https://doi.org/10.4103/joacp.JOACP_329_20

17. Inamdar P, Hota PK, Undi M. Feasibility and Effectiveness of Direct Observation of Procedure Skills (DOPS) in general surgery discipline: a pilot study. Indian J Surg 2022;84(Suppl 1):109-14.

18. Qureshi MA, Latif MZ. Experience of Dops (Direct Observation Practical Skills) by postgraduate general surgical residents of Lahore. Esculapio 2023;19(01):91-5. https://doi.org/10.51273/esc23.2519119

19. Mehranfard S, Pelarak F, Mashalchi H, Kalani L, Masoudiyekta L. Efficacy of logbook as a clinical assessment: using DOPS evaluation method. J Multidiscip Care 2022;11(4):185. https://doi.org/10.34172/jmdc.2022.62

20. Handayani NP, Menaldi SL, Felaza E. Exploration of the implementation of direct observation of procedural skill as an instrument for evaluation of clinical skills in dental professional education. Makassar Dent J 2022;11(1):42-7. https://doi.org/10.35856/mdj.v11i1.507

CONFLICT OF INTEREST: Authors declared no conflict of interest, whether financial or otherwise, that could influence the integrity, objectivity, or validity of their research work.

GRANT SUPPORT AND FINANCIAL DISCLOSURE: Authors declared no specific grant for this research from any funding agency in the public, commercial or non-profit sectors.

DATA SHARING STATEMENT: The data that support the findings of this study are available from the corresponding author upon reasonable request.

KMUJ web address: www.kmuj.kmu.edu.pk

Email address: kmuj@kmu.edu.pk